Web Crawler: Fetching and Storing Data from 51job (Advanced)

Experiment 1

Target site: 51job (前程无忧), a recruitment website

Target URL: https://search.51job.com/list/120000,000000,0000,00,9,99,Python,2,1.html

Target data: (1) job title (2) company name (3) work location (4) salary (5) posting date

Requirements

(1) Use the urllib or requests library to fetch the page source of this site, and save the source to a file;

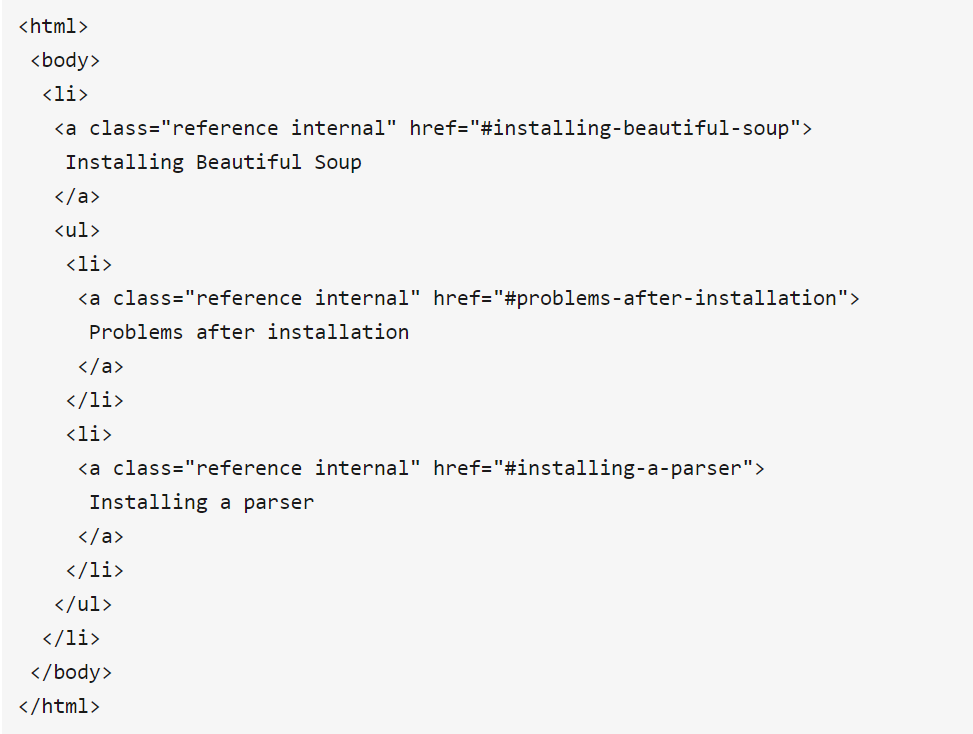

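(2) Choose one of re, bs4, or lxml to read and parse the saved source, locate the specific tags that hold the target data, and work out the page structure;

(3) Define functions that save the extracted target data to txt and csv files;

(4) Use a framework-style structure in which the functions pass data to each other as parameters to carry out the whole crawl.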

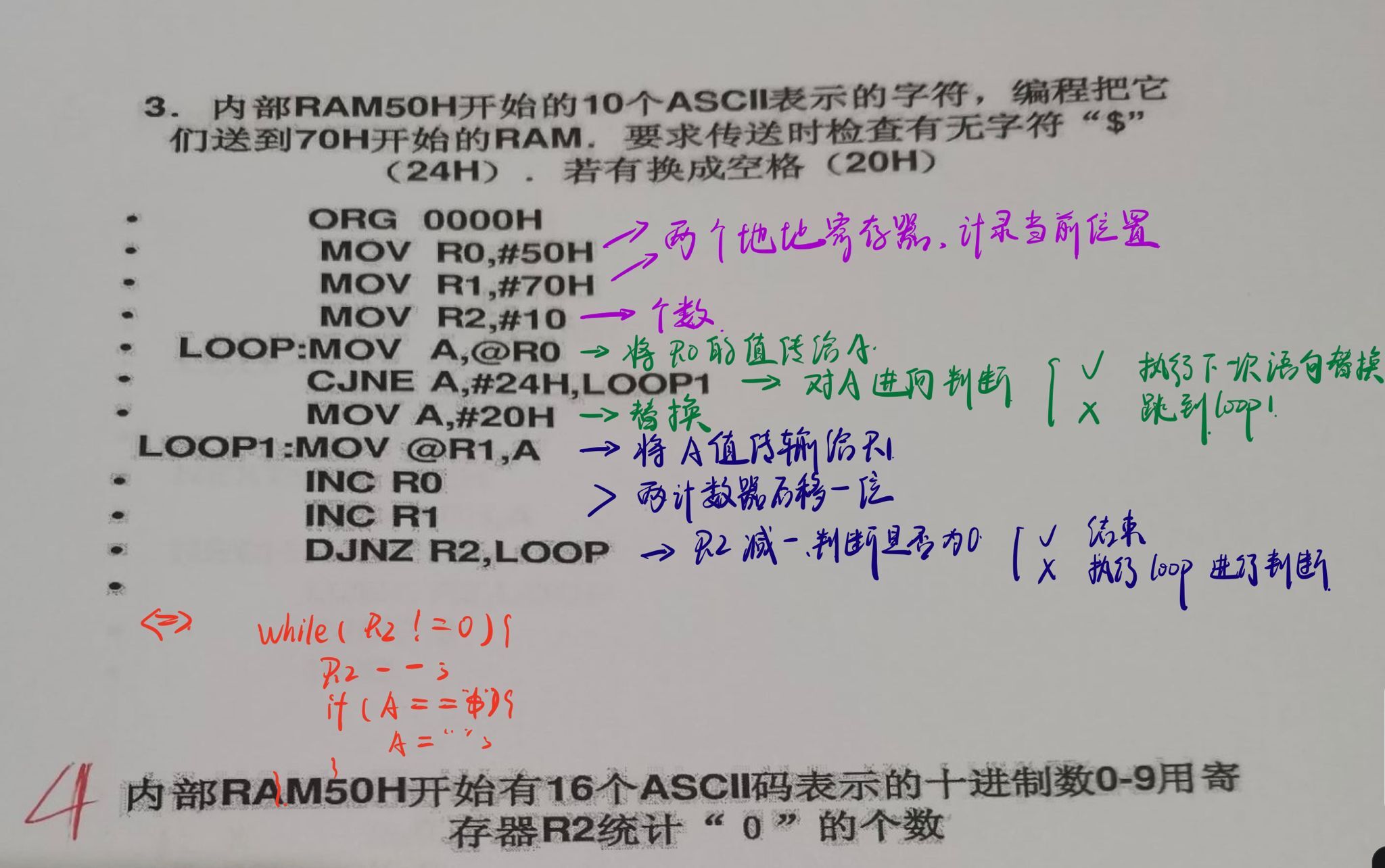
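Requirements (1) and (4) can be sketched as a few small functions that hand their results to each other. This is a minimal sketch and not the code used in this experiment: it uses urllib from the standard library, the names `URL_TEMPLATE`, `build_url`, `fetch_html`, `save_html`, and `load_html` are hypothetical, and it assumes that the keyword and the final number in the target URL are the search term and the page number.

```python
from pathlib import Path
from urllib.parse import quote
from urllib.request import Request, urlopen

# Assumption: in the target URL, "Python" is the search keyword and the
# last number before ".html" is the page number.
URL_TEMPLATE = (
    "https://search.51job.com/list/"
    "120000,000000,0000,00,9,99,{keyword},2,{page}.html"
)


def build_url(keyword, page=1):
    """Build a search URL for a keyword and page number."""
    return URL_TEMPLATE.format(keyword=quote(keyword), page=page)


def fetch_html(url, timeout=30):
    """Download a page and decode it to text."""
    request = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=timeout) as response:
        raw = response.read()
        # Use the charset from the response headers; falling back to
        # UTF-8 is an assumption, the site may use another encoding.
        charset = response.headers.get_content_charset() or "utf-8"
    return raw.decode(charset, errors="replace")


def save_html(html, path):
    """Save the page source so it can be parsed later without refetching."""
    Path(path).write_text(html, encoding="utf-8")


def load_html(path):
    """Read a previously saved page source."""
    return Path(path).read_text(encoding="utf-8")
```

A crawl of the first page might then call `save_html(fetch_html(build_url("Python", 1)), "page1.html")` and pass the result of `load_html("page1.html")` to the parsing step. Saving the source first means the parser can be developed and rerun against the file without sending another request.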

Source code

    import csv
    import json

    import requests
    from lxml import etree
    from requests.exceptions import RequestException

    def getHtmlText(url):
        """Fetch a page and return its HTML text, or "" if the request fails."""
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36 Edg/80.0.361.69'
        }
        try:
            result = requests.get(url, headers=headers, timeout=30)
            result.raise_for_status()
            # Guess the encoding from the response body rather than the headers.
            result.encoding = result.apparent_encoding
            return result.text
        except RequestException:
            return ""

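    def parsePage(html):
        """Return a list of 5-field records parsed from the result list."""
        # A failed fetch returns "", which cannot be parsed.
        if not html:
            return []
        newhtml = etree.HTML(html, etree.HTMLParser())
        # Collect every text node inside the result rows.
        result = newhtml.xpath('//*[@id="resultList"]/div[@class="el"]//text()')
        # Remove spaces and line breaks, then drop fragments that end up empty.
        ulist = [text.replace(" ", "").replace("\r", "").replace("\n", "") for text in result]
        ulist = [text for text in ulist if text]
        # Group the flat list into records of 5 fields; leftover fragments are ignored.
        rlist = []
        for i in range(len(ulist) // 5):
            rlist.append(ulist[i * 5:i * 5 + 5])
        return rlist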

    # def txtdata(data):
    #     with open('top20.txt', 'w') as file:
    #         for i in data:
    #             for j in i:
    #                 print(j)
    #     print('successful')

    def storedata(data):
        """Write each record to top20.txt as one JSON array per line."""
        with open('top20.txt', 'w', encoding='utf-8') as file:
            for i in data:
                file.write(json.dumps(i, ensure_ascii=False) + '\n')
        print('ok')

    def csvdata(data):
        """Write the records to top20.csv with a header row."""
        with open('top20.csv', 'w', encoding='utf-8', newline='') as csvfile:
            # Columns: job title, company name, work location, salary, posting date.
            fieldnames = ['职位名', '公司名', '工作地点', '薪资', '发布时间']
            writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
            writer.writeheader()
            for i in data:
                writer.writerow(dict(zip(fieldnames, i)))
        print('ok')

    def main():
        url = "https://search.51job.com/list/120000,000000,0000,00,9,99,Python,2,1.html"
        html = getHtmlText(url)
        rlist = parsePage(html)
        # txtdata(data)
        storedata(rlist)
        csvdata(rlist)

    if __name__ == "__main__":
        main()
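`parsePage` flattens the text of all rows into one list and cuts it into groups of five, so a single row with a missing field (for example, no salary) shifts every record after it. One possible alternative, sketched below, is to build each record from the text fragments of one row only. The names `FIELDS` and `rows_to_records` and the sample rows are hypothetical. The sketch assumes the fragments of each row have already been collected separately, for instance by calling `row.xpath('.//text()')` on each `div` matched by `//*[@id="resultList"]/div[@class="el"]`.

```python
FIELDS = ("job_title", "company", "location", "salary", "posted")


def rows_to_records(rows):
    """Turn per-row text fragments into dicts, skipping incomplete rows."""
    records = []
    skipped = 0
    for fragments in rows:
        # strip() removes surrounding whitespace and line breaks but,
        # unlike the replace() chain, keeps spaces inside a value.
        values = [fragment.strip() for fragment in fragments]
        values = [value for value in values if value]
        if len(values) != len(FIELDS):
            # A row with a missing field is dropped instead of shifting
            # the fields of every later row.
            skipped += 1
            continue
        records.append(dict(zip(FIELDS, values)))
    return records, skipped


# Made-up sample data; the second row has no salary.
sample_rows = [
    ["\r\n  Python Developer ", "Company A", "City", "1-1.5万/月", "04-20"],
    ["\r\n  Data Engineer ", "Company B", "City", "  ", "04-20"],
    ["\r\n  Crawler Engineer ", "Company C", "City", "8-10千/月", "04-19"],
]
records, skipped = rows_to_records(sample_rows)
print(len(records), "records kept,", skipped, "skipped")
```

With the flat grouping, the same sample would yield a second record that mixes the posting date of one job with the title of the next. Grouping per row trades a few lost rows for records whose fields are known to line up.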

Program output: